Best credit cards of February 2026

Advertiser Disclosure: Bankrate’s editorial team chooses and recommends the credit cards on this page. Our websites may earn compensation when a customer clicks on a link, when an application is approved, or when an account is opened. However, our recommendations and card ratings are produced independently without influence by advertising partnerships with issuers.

Best standalone rewards card

on Chase's secure site

Intro offer

Earn $200 cash back

Rewards rate

1.5% - 5%

Annual fee

$0

Regular APR

18.24% - 27.74% Variable

Why you'll like this: Few cards offer a flat rewards rate this high in addition to bonus rewards in popular categories like dining and travel.

Best for balance transfers

on Wells Fargo's secure site

Purchase intro APR

0% intro APR for 21 months from account opening on purchases

Regular APR

17.49%, 23.99%, or 28.24% Variable APR

Intro offer

N/A

Rewards rate

N/A

Why you'll like this: It offers a long intro APR period on both new purchases and qualifying balance transfers, along with a below-average ongoing interest rate.

Limited time offer

$250 Capital One Travel Credit

Best for bonus offer

on Capital One's secure site

Intro offer

Enjoy a $250 travel credit & earn 75K bonus miles

Rewards rate

2X miles - 5X miles

Annual fee

$95

Regular APR

19.49% - 28.49% (Variable)

Why you'll like this: Its valuable sign-up bonus and travel credit add terrific short-term value to an already lucrative travel card.

Best cash back card for food

on Capital One's secure site

Intro offer

Earn a one-time $200 cash bonus

Rewards rate

1% - 8%

Annual fee

$0

Regular APR

18.49% - 28.49% (Variable)

Why you'll like this: It’s one of the few cards that earn excellent, unlimited rewards rates at both grocery stores and restaurants.

Apply with confidence

By applying, you can see if you're approved before impacting your credit

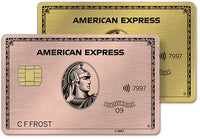

Best travel card for food

on American Express's secure site

Intro offer (terms apply)

As High As 100,000 points. Find Out Your Offer.

Rewards rate

1X - 4X

Annual fee

$325

APR

See Pay Over Time APR

Why you'll like this: Its dining perks and top-tier rewards rate at both supermarkets and restaurants make it a uniquely valuable travel card for foodies.

Best for affordable travel perks

on Capital One's secure site

Intro offer

Earn 75,000 bonus miles

Rewards rate

2X miles - 10X miles

Annual fee

$395

Regular APR

19.49% - 28.49% (Variable)

Why you'll like this: It offers mostly valuable, practical perks like annual travel credits and airport lounge access, making it a better deal than many other luxury cards.

Best luxury card for travel rewards

on Chase's secure site

Intro offer

125,000 bonus points

Rewards rate

1x - 8x

Annual fee

$795

Regular APR

19.49% - 27.99% Variable

Why you'll like this: Jetsetters can rack up some of the most valuable rewards on the market with top-shelf travel rewards rates and perks.

Best travel card for rewards value

on Chase's secure site

Intro offer

75,000 bonus points

Rewards rate

1x - 5x

Annual fee

$95

Regular APR

19.24% - 27.49% Variable

Why you'll like this: It boasts terrific rewards value with its annual bonus points, practical bonus categories, high-value points and redemption flexibility.

Reward Details

What you should know

Card Details

Apply with confidence

By applying, you can see if you're approved before impacting your credit

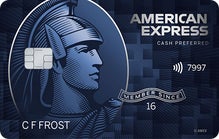

Best rewards card for groceries

on American Express's secure site

Intro offer (terms apply)

Earn $250

Rewards rate

1% - 6%

Annual fee

$0 intro annual fee for the first year, then $95.

APR

19.49% - 28.49% Variable

Why you'll like this: This card’s chart-topping U.S. supermarket cash back rate (and rates in other key categories) can make it the default choice for big grocery spenders.

Best for existing U.S. Bank account holders

on U.S. Bank's secure site

Intro offer

N/A

Rewards rate

2%

Annual fee

$0

Regular APR

17.74% - 27.99% Variable

Why you'll like this: If you’re a U.S. Bank loyalist with significant savings, this card can offer outstanding cash back rewards on all purchases.

on Amazon's secure site

Intro offer

Get a $150 Amazon Gift Card

Rewards rate

1% - 10%

Annual fee

$0

Regular APR

18.74% - 27.49% Variable

Why you'll like this: As a Prime member, you’ll earn great cash back rates on Amazon and Whole Foods purchases.

Best luxury card for travel perks

on American Express's secure site

Intro offer (terms apply)

As High As 175,000 points. Find Out Your Offer.

Rewards rate

5X

Annual fee

$895

APR

See Pay Over Time APR

Why you'll like this: It boasts the most comprehensive airport lounge access you can get, plus dozens of other top-tier travel perks and credits totaling nearly $2,000 in value.

Best for fair credit

Intro offer

N/A

Rewards rate

1.5% - 5%

Annual fee

$39

Regular APR

28.99% (Variable)

Why you'll like this: It earns rewards while helping you build credit.

Best low-cost secured card

Intro offer

N/A

Rewards rate

N/A

Annual fee

$0

Regular APR

28.99% (Variable)

Why you'll like this: You could get an initial credit line of at least $200 with a refundable deposit starting at $49, depending on your creditworthiness.

What you should know

Card Details

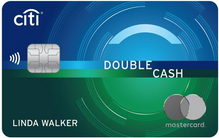

Best flat-rate cash back card

Intro offer

$200 cash back

Rewards rate

2% - 5%

Annual fee

$0

Regular APR

17.49% - 27.49% (Variable)

Why you'll like this: It earns one of the best cash rewards rates on all eligible purchases, and sets itself apart from rivals with its stellar balance transfer offer and card pairing opportunities.

Compare Bankrate's best credit cards of 2026

| Card name | Our pick for | Card highlights | Ongoing APR | Bankrate Score |
|---|---|---|---|---|
| | Standalone rewards card | 5% cash back on travel booked through Chase Travel℠; 3% cash back on dining and drugstore purchases; 1.5% cash back on all other purchases; No annual fee | 18.24% - 27.74% Variable | 5.0 / 5. Apply on Chase's secure site |
| | Balance transfers | 0% intro APR for 21 months from account opening on qualifying balance transfers (within the first 120 days) and purchases (The regular APR will apply thereafter. 5% balance transfer fee, $5 minimum.); No annual fee | 17.49%, 23.99%, or 28.24% Variable APR | 4.3 / 5. Apply on Wells Fargo's secure site |
| | Bonus offers | Earn 5X miles per dollar on hotels, vacation rentals and rental cars booked through Capital One Travel; Earn 2X miles per dollar on all other purchases; $95 annual fee | 19.49% - 28.49% (Variable) | 5.0 / 5. Apply on Capital One's secure site |
| | Cash back card for food | Earn 8% cash back on Capital One Entertainment purchases; Earn unlimited 5% cash back on hotels, vacation rentals and rental cars booked through Capital One Travel; Earn unlimited 3% cash back at grocery stores (excluding superstores like Walmart® and Target®), on dining, entertainment and popular streaming services; Earn 1% cash back on all other purchases | 18.49% - 28.49% (Variable) | 5.0 / 5. Apply on Capital One's secure site |
| | Travel card for food | 4X points on restaurants worldwide (on up to $50,000 in purchases per year, then 1X points) and U.S. supermarket purchases (on up to $25,000 in purchases per year, then 1X points); 3X points on flights booked directly with airlines or via Amex Travel; Over $400 in various credits in monthly Uber Cash and credits toward eligible dining purchases (Uber Cash expires at the end of the month. Enrollment required for all credits.); $325 annual fee | See Pay Over Time APR | 4.9 / 5. Apply on American Express's secure site (terms apply) |
| | Affordable travel perks | 10X miles on hotel and rental car bookings through Capital One Travel; 5X miles on flights and vacation rentals booked through Capital One Travel; 2X miles on all other purchases; Annual $300 Capital One Travel credit and 10,000 account anniversary bonus miles can help offset part of the annual fee; $395 annual fee | 19.49% - 28.49% (Variable) | 5.0 / 5. Apply on Capital One's secure site |
| | Luxury card for travel rewards | 8X points on Chase Travel℠ bookings; 4X points on flights and hotel stays booked directly with airlines and hotels; 3X points on dining; Top-value travel perks available, including almost $1,700 of recurring perks toward eligible travel and dining, plus Priority Pass Select airport lounge membership; Additional Southwest Airlines, IHG One and The Shops at Chase perks for the remainder of each calendar year you spend $75,000; $795 annual fee | 19.49% - 27.99% Variable | 4.8 / 5. Apply on Chase's secure site |
| | Travel rewards value | 3X points on online grocery purchases (excluding Walmart, Target and wholesale clubs), dining (including eligible delivery services) and select streaming services; 5X points on Chase Travel℠ and Lyft Rides (Lyft offer through September 30, 2027); 2X points on other travel; Yearly bonus points and credits: $50 annual hotel stay credit and 10% back on your previous year's total combined spending points; $95 annual fee | 19.24% - 27.49% Variable | 4.9 / 5. Apply on Chase's secure site |
| | Grocery rewards card | 6% cash back at U.S. supermarkets on up to $6,000 per year in eligible purchases (then 1%), and on select U.S. streaming subscriptions; 3% cash back at eligible U.S. gas stations and on transit (including taxis/rideshare, parking, tolls, trains, buses and more); Up to $120 annually in Disney streaming subscription credits (up to $10 each month toward eligible subscriptions and bundles at Disneyplus.com, Hulu.com, Plus.espn.com U.S. websites); $95 annual fee ($0 intro annual fee the first year) | 19.49% - 28.49% Variable | 4.4 / 5. Apply on American Express's secure site (terms apply) |
| | U.S. Bank loyalists | Unlimited 2% cash back; Up to an additional 2% cash back on your first $10,000 when paired with a U.S. Bank Smartly® Savings account (terms and conditions apply); No annual fee | 17.74% - 27.99% Variable | 3.9 / 5. Apply on U.S. Bank's secure site |
| | Amazon Prime members | 10% cash back or more on a rotating selection of items and categories on Amazon.com with an eligible Prime membership; 5% cash back at Amazon.com, Amazon Fresh, Whole Foods Market, and on Chase Travel purchases with an eligible Prime membership; 2% cash back at gas stations, restaurants, and on local transit and commuting (including rideshare); No annual fee | 18.74% - 27.49% Variable | 4.5 / 5. Apply on Amazon's secure site |
| | Luxury card for travel perks | 5X points on directly-booked airfare and flights and prepaid hotels booked through American Express Travel® (on up to $500,000 spent on flights per calendar year); 2X points on prepaid car rentals, vacation packages and cruise reservations through American Express Travel®; Top-value travel perks available, including around $3,500 of recurring monthly and annual credits plus comprehensive airport lounge access; $895 annual fee | See Pay Over Time APR | 4.8 / 5. Apply on American Express's secure site (terms apply) |
| | Fair credit | Automatic account review starting at 6 months to be considered for a higher credit line; 5% cash back on hotels and rental cars booked through Capital One Travel; 1.5% cash back on all other purchases; $39 annual fee | 28.99% (Variable) | 4.0 / 5. Apply on Capital One's secure site |
| | Low-cost secured card | Refundable security deposit starting at $49 to get at least a $200 initial credit line; Automatic account review starting at 6 months to be considered for a higher credit line; No annual fee | 28.99% (Variable) | 4.1 / 5. Apply on Capital One's secure site |
| | Flat-rate cash back | 5% cash back on hotels, car rentals and attractions booked through the Citi Travel® portal; 2% cash back: 1% when you buy, 1% when you pay; 0% intro APR for 18 months on balance transfers (transfers must be within the first four months); No annual fee | 17.49% - 27.49% (Variable) | 4.2 / 5. Apply on Citi's secure site |

Bankrate Score: Our writers, editors and industry experts score credit cards based on a variety of factors including card features, bonus offers and independent research. Credit card issuers have no say or influence on how we rate cards.
